Robots.txt is a small but important file that shapes how search engines crawl your website. It tells web crawlers which parts of your website they should or shouldn't access. Optimizing robots.txt helps search engines focus on the content you want your audience to find, while steering them away from irrelevant or duplicate pages.

If you are using Blogger as your website platform, optimizing your robots.txt file is particularly important since Blogger websites have certain default settings that could affect how search engines crawl and index your website. In this step-by-step guide, we'll explore the basics of optimizing robots.txt for your Blogger website.

We'll cover everything from the basics of robots.txt to the benefits of optimizing it, how to optimize robots.txt, and common mistakes to avoid. By the end of this guide, you'll have a clear understanding of how to optimize your robots.txt file to ensure that your Blogger website is visible to your target audience and indexed correctly by search engines.

Definition of robots.txt

Robots.txt is a small text file that webmasters create to tell web robots, or search engine crawlers, how to crawl their website. The file is placed in the root directory of the website (for example, https://example.com/robots.txt) and lists which pages or sections crawlers may or may not access. Strictly speaking, robots.txt controls crawling rather than indexing: a blocked URL can still appear in search results if other pages link to it.

By writing clear instructions in the robots.txt file, webmasters can help search engines crawl their website's content more efficiently, keep crawlers out of sections that add no value in search, and support the website's overall SEO performance. The robots.txt file is a simple but powerful tool for controlling how a website is crawled.

Importance of optimizing robots.txt for your Blogger website

Optimizing your robots.txt file is crucial for ensuring that search engines crawl and index your Blogger website's content correctly. By providing search engine crawlers with clear instructions on which pages to crawl and which ones to ignore, you can ensure that your website's content is visible to the right audience while also avoiding any indexing issues that could negatively impact your SEO performance.

- Optimizing your robots.txt file is particularly important for Blogger websites because Blogger has certain default settings that can affect how search engines crawl and index your website. By customizing your robots.txt file, you can ensure that your website's content is crawled and indexed according to your specific needs, which can help improve your search engine rankings, increase traffic to your site, and boost your overall online visibility.

- Robots.txt can also keep crawlers away from pages you do not want to show up in search, such as internal search results or thin archive pages. Keep in mind, however, that robots.txt is not a privacy tool: the file itself is public, and a blocked URL can still be indexed if other pages link to it. Pages with personal information or internal company documents should be kept off the public blog or placed behind real access controls.

- Overall, optimizing your robots.txt file is a critical step in ensuring that your Blogger website is crawled and indexed correctly by search engines, which can help improve your website's visibility and online performance.

Understanding Robots.txt

Robots.txt is a simple text file that is placed in the root directory of a website to tell web robots, or search engine crawlers, how to crawl the website's pages. The file contains a set of rules that tell web robots which pages or sections of the website they can or cannot access. The robots.txt file is a key element of a website's SEO strategy because it lets webmasters control which parts of their website search engines crawl.

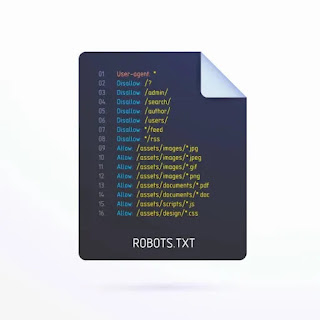

The robots.txt file is built from two main parts: the user-agent line and the disallow directives. The user-agent identifies the specific web robot or search engine crawler that the rules apply to, while the disallow directive specifies which pages or sections of the website that user-agent should not crawl. Many files also include Allow and Sitemap lines.


By using robots.txt, webmasters can control how their website's content is crawled, which helps search engines focus on the pages that matter and can, in turn, support better rankings and more traffic.

It is important to note that while robots.txt can provide guidance to web robots and search engine crawlers, it does not guarantee that they will follow the rules. Some web robots or search engine crawlers may choose to ignore the instructions in robots.txt and crawl or index pages that have been disallowed. As such, webmasters should use additional measures, such as password protection or other security measures, to protect sensitive or confidential information on their website.
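Because robots.txt is served publicly, anyone, including a scraper, can read its Disallow lines. The minimal Python sketch below (the sample file is made up for illustration) lists those paths in a few lines, which is a good reminder not to rely on robots.txt to hide anything sensitive.

```python
def disallowed_paths(robots_txt):
    """Collect every non-empty Disallow value from robots.txt text."""
    paths = []
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        key, sep, value = line.partition(":")
        if sep and key.strip().lower() == "disallow" and value.strip():
            paths.append(value.strip())
    return paths


# Hypothetical robots.txt contents.
SAMPLE = """\
User-agent: *
Disallow: /private/
Disallow: /drafts/   # not published yet
Allow: /
"""

print(disallowed_paths(SAMPLE))  # ['/private/', '/drafts/']
```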

Purpose of Robots.txt

The primary purpose of robots.txt is to give webmasters a way to tell web robots or search engine crawlers which pages or sections of their website should or should not be crawled. Used well, this helps search engines find and prioritize the content that matters, which can support better search engine rankings and more organic traffic.

Robots.txt can also reduce the server resources that search engine crawlers consume. By disallowing pages or sections that are not relevant or useful in search, webmasters cut down on unnecessary crawler requests. On a self-hosted site this can ease server load; on Blogger, where Google hosts your blog, the main benefit is that crawlers spend their visits on your important pages.

Robots.txt is sometimes described as a way to stop content from being copied or scraped, but it offers little protection here: well-behaved crawlers respect it, while scrapers can simply ignore it. What it can do is keep legitimate search engine crawlers away from duplicate versions of your content, such as search-result or archive URLs.

Overall, the purpose of robots.txt is to give webmasters control over how their website's content is crawled by search engines, which can help improve the website's visibility and performance.

Syntax of Robots.txt

The syntax of the robots.txt file is relatively simple and consists of two main components: user-agents and directives.

User-agents:

The user-agent identifies the specific web robot or search engine crawler that the rules apply to. The most common user-agent is "*" (asterisk), which applies to all web robots and crawlers. However, webmasters can also write rules for specific crawlers, such as Googlebot (Google), Bingbot (Bing), or Slurp (Yahoo).
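A crawler obeys only the group that best matches its own name; it does not combine that group with the "*" group. The sketch below checks this with Python's standard-library urllib.robotparser, whose matching is a simplified version of what Google does. The file and the example.blogspot.com URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical file with a Googlebot-specific group and a catch-all group.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /drafts/

User-agent: *
Disallow: /private/
"""

rp = RobotFileParser()
rp.parse(ROBOTS_TXT.splitlines())

base = "https://example.blogspot.com"
# Googlebot follows only its own group, so /private/ is NOT blocked for it.
print(rp.can_fetch("Googlebot", base + "/private/page.html"))  # True
print(rp.can_fetch("Googlebot", base + "/drafts/page.html"))   # False
# Other crawlers fall back to the "*" group.
print(rp.can_fetch("Bingbot", base + "/private/page.html"))    # False
print(rp.can_fetch("Bingbot", base + "/drafts/page.html"))     # True
```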

Directives:

The directives specify which pages or sections of the website the specified user-agent should or should not crawl. There are two main directives:

Disallow: The disallow directive tells the search engine crawler which pages or directories it should not crawl. For example, if a webmaster wants to keep crawlers out of a directory named "private", the rule would look like this:

```
User-agent: *
Disallow: /private/
```

This tells all web robots and crawlers not to crawl any pages or directories within the "private" directory.
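You can check this rule locally with Python's standard-library urllib.robotparser. The example.blogspot.com URLs are placeholders, and this parser only approximates how real search engine crawlers match rules. The second check shows why the lines of a group belong together: some parsers, including this one, end a group at a blank line, so a Disallow separated from its User-agent line is silently lost.

```python
from urllib.robotparser import RobotFileParser


def parser_for(robots_txt):
    """Build a RobotFileParser from robots.txt text instead of fetching it."""
    rp = RobotFileParser()
    rp.parse(robots_txt.splitlines())
    return rp


# The "private" rule, with no blank line inside the group.
good = parser_for("User-agent: *\nDisallow: /private/\n")
print(good.can_fetch("Googlebot", "https://example.blogspot.com/private/notes.html"))  # False
print(good.can_fetch("Googlebot", "https://example.blogspot.com/2023/05/post.html"))   # True

# A blank line between the two lines ends the group in this parser,
# so the Disallow rule is dropped and everything stays crawlable.
split = parser_for("User-agent: *\n\nDisallow: /private/\n")
print(split.can_fetch("Googlebot", "https://example.blogspot.com/private/notes.html"))  # True
```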

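Allow: The allow directive explicitly permits crawling of a page or directory. It is mainly useful for making an exception inside a section that a broader Disallow rule blocks. For example, if a webmaster wants crawlers to access only a subdirectory named "blog", the rules would look like this:

```
User-agent: *
Allow: /blog/
Disallow: /
```

This tells all web robots and crawlers that they may crawl pages within the "blog" directory but nothing else on the site. Google applies the most specific (longest) matching rule regardless of order, but placing the Allow line first also keeps simpler parsers, which use the first rule that matches, from blocking /blog/.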
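How a crawler settles a conflict between Allow and Disallow is easier to see in code. RFC 9309, the robots.txt standard that Google follows, says the most specific match (the longest matching path) wins and that Allow should win a tie. The sketch below implements that rule for plain path prefixes only; wildcard support is deliberately left out as a simplifying assumption, and is_allowed is a hypothetical helper, not a library function.

```python
def is_allowed(rules, path):
    """Decide whether `path` may be crawled under one user-agent group.

    `rules` is a list of (directive, value) pairs such as ("Disallow", "/").
    The longest matching value wins; on a tie, Allow wins. An empty value
    (e.g. "Disallow:") matches nothing, and no match at all means allowed.
    Values are treated as plain prefixes (no '*' or '$' wildcards).
    """
    best_len = -1
    allowed = True
    for directive, value in rules:
        if not value or not path.startswith(value):
            continue
        is_allow = directive.lower() == "allow"
        if len(value) > best_len or (len(value) == best_len and is_allow):
            best_len = len(value)
            allowed = is_allow
    return allowed


rules = [("Disallow", "/"), ("Allow", "/blog/")]
print(is_allowed(rules, "/blog/first-post.html"))          # True
print(is_allowed(rules, "/about.html"))                    # False
print(is_allowed(list(reversed(rules)), "/blog/x.html"))   # True: order does not matter
```

Python's urllib.robotparser, by contrast, uses the first matching rule in file order, so listing the more specific Allow line first keeps both kinds of parser in agreement.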

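It is important to note that robots.txt is only partly case-sensitive. Directive names such as User-agent and Disallow can be written in any case, but the paths in your rules are case-sensitive: Disallow: /Private/ does not block /private/. The file itself must be plain text, and its name must be robots.txt in lowercase. Also, different search engines may interpret the directives differently, so webmasters should always consult the documentation of each search engine for specific syntax guidelines.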
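To see which parts of a rule are case-sensitive in practice, here is a quick check with Python's standard-library urllib.robotparser (the URLs are placeholders). It accepts directive names in any case but compares paths exactly.

```python
from urllib.robotparser import RobotFileParser

rp = RobotFileParser()
# Directive names written in capitals are still recognized...
rp.parse("""\
USER-AGENT: *
DISALLOW: /Private/
""".splitlines())

# ...but the path is matched case-sensitively.
print(rp.can_fetch("Googlebot", "https://example.blogspot.com/Private/a.html"))  # False
print(rp.can_fetch("Googlebot", "https://example.blogspot.com/private/a.html"))  # True
```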

Examples of Robots.txt

Here are some examples of robots.txt files:

- Allow all robots to crawl all content:

```
User-agent: *
Disallow:
```

This example allows all web robots and crawlers to crawl every page on the website.

- Disallow all robots from crawling all content:

```
User-agent: *
Disallow: /
```

This example blocks all web robots and crawlers from crawling any page on the website.

- Allow Googlebot to crawl all content, but disallow all other robots:

```
User-agent: Googlebot
Disallow:

User-agent: *
Disallow: /
```

This example allows Googlebot to crawl every page on the website, while blocking all other web robots and crawlers. The blank line separates the two groups of rules.

- Disallow a specific directory from being crawled:

```
User-agent: *
Disallow: /private/
```

This example blocks all web robots and crawlers from crawling any page within the "private" directory.

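- Disallow a specific file type from being crawled:

```
User-agent: *
Disallow: /*.pdf$
```

This example blocks crawlers from any URL ending in ".pdf". Here "*" matches any sequence of characters and "$" marks the end of the URL. Google and Bing support these wildcards, but they were not part of the original robots.txt convention, so simpler crawlers may read them literally.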
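The examples above can be checked locally. The sketch below runs the first four through Python's standard-library urllib.robotparser with placeholder example.blogspot.com URLs. That parser does not implement the * and $ wildcards, so the last example is handled by a small hypothetical helper, wildcard_match, that follows Google's documented meaning of those two characters; treat it as a simplified sketch rather than a full implementation.

```python
import re
from urllib.robotparser import RobotFileParser

BASE = "https://example.blogspot.com"  # placeholder blog address

# The first four examples, written as plain strings.
EXAMPLES = {
    "allow_all": "User-agent: *\nDisallow:\n",
    "block_all": "User-agent: *\nDisallow: /\n",
    "googlebot_only": "User-agent: Googlebot\nDisallow:\n\nUser-agent: *\nDisallow: /\n",
    "block_private": "User-agent: *\nDisallow: /private/\n",
}


def allowed(robots_txt, agent, url):
    """Ask Python's standard-library parser whether `agent` may fetch `url`."""
    rp = RobotFileParser()
    rp.parse(robots_txt.splitlines())
    return rp.can_fetch(agent, url)


def wildcard_match(pattern, path):
    """Match a path against a pattern where '*' = any run and a trailing '$' = end of URL."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape regex metacharacters, then turn the escaped '*' back into '.*'.
    regex = re.escape(pattern).replace(r"\*", ".*")
    if anchored:
        regex += "$"
    return re.match(regex, path) is not None  # re.match anchors at the start


print(allowed(EXAMPLES["allow_all"], "Bingbot", BASE + "/p/about.html"))         # True
print(allowed(EXAMPLES["block_all"], "Googlebot", BASE + "/p/about.html"))       # False
print(allowed(EXAMPLES["googlebot_only"], "Googlebot", BASE + "/p/about.html"))  # True
print(allowed(EXAMPLES["googlebot_only"], "Bingbot", BASE + "/p/about.html"))    # False
print(allowed(EXAMPLES["block_private"], "Bingbot", BASE + "/private/a.html"))   # False
print(wildcard_match("/*.pdf$", "/files/guide.pdf"))       # True
print(wildcard_match("/*.pdf$", "/files/guide.pdf?dl=1"))  # False
```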

You can check any website's robots.txt file just by adding /robots.txt after the website's address, like this:

This is my website: https://techbrainaic.blogspot.com, so my robots.txt file is located at: https://techbrainaic.blogspot.com/robots.txt
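Because robots.txt always sits at the root of a host, its address can be derived from any page URL. A minimal sketch using Python's urllib.parse; the post path in the example is made up:

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url):
    """Return the robots.txt URL for the host that serves `page_url`."""
    parts = urlsplit(page_url)
    # robots.txt always lives at the root path of the scheme + host;
    # the page's own path, query, and fragment are discarded.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://techbrainaic.blogspot.com/2023/05/some-post.html?m=1"))
# https://techbrainaic.blogspot.com/robots.txt
```

To fetch and parse the live file, pass the result to urllib.robotparser.RobotFileParser and call its read() method, which requires network access.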

It is important to note that robots.txt rules can vary depending on the specific needs and structure of each website. Webmasters should always carefully review and test their robots.txt file to ensure that it is properly configured and achieving the desired results.

Why Optimize Robots.txt for Blogger Websites

Optimizing Robots.txt for Blogger websites is important for several reasons:

- Improved search engine crawling: Robots.txt tells search engines which pages and files on your website they may crawl. By optimizing the robots.txt file, you can make sure search engines reach the important pages and files on your website, which can help improve your search engine rankings.

- Better user experience: A well-optimized robots.txt file keeps crawlers focused on your useful pages, so searchers are more likely to land on relevant, helpful posts rather than thin pages such as internal search results.

- Faster website loading times: When search engines crawl your website, they use resources such as bandwidth and server processing power. By optimizing the robots.txt file, you can prevent search engines from crawling unnecessary pages and files, which reduces that load. This matters most on self-hosted sites; on Blogger, Google hosts your blog, so the effect on loading times is usually small.

- Enhanced website security: Keeping crawlers away from pages such as login pages and administrative areas helps keep them out of search results. Remember, though, that robots.txt is publicly readable and does not block access, so sensitive pages still need proper protection.

Overall, optimizing the Robots.txt file for your Blogger website is an important step in improving your website's search engine rankings, user experience, loading times, and security.

Benefits of optimizing robots.txt

Optimizing Robots.txt can bring several benefits for your website:

- Improved crawling efficiency: Optimizing your Robots.txt file helps search engine bots to crawl your website more efficiently by avoiding the crawling of unnecessary pages or files. This can help save server resources and lead to faster crawl times.

- Better search engine rankings: By optimizing the Robots.txt file, you can ensure that search engines can easily access and index important pages and content on your website. This can improve your website's visibility in search engine results and help increase organic traffic to your site.

- Enhanced user experience: A well-optimized robots.txt file steers crawlers toward relevant and valuable content, which helps visitors arriving from search find the information they need more easily and quickly.

- Increased website security: Optimizing robots.txt can keep sensitive pages, such as login pages, from being crawled and surfacing in search results. Because the file is public and crawlers may ignore it, it should complement, not replace, real access controls.

- Reduced server load: Optimizing the robots.txt file can reduce server load by cutting the number of crawler requests made for unnecessary pages. This can help website performance, especially on self-hosted sites.

In summary, optimizing your Robots.txt file is an important step towards improving your website's search engine visibility, user experience, security, and performance.

Consequences of not optimizing robots.txt

Not optimizing your Robots.txt file can have several negative consequences for your website:

- Poor search engine rankings: If your robots.txt file is misconfigured, search engines may not be able to crawl important pages and content on your website. This can lead to poor search engine rankings and reduced organic traffic to your site.

- Duplicate content issues: Without proper rules, search engines may spend time crawling duplicate versions of your content, such as search, label, or archive pages. Google does not usually penalize duplicate content, but it can split ranking signals across several URLs and weaken your preferred pages.

- Security risks: Without suitable rules, crawlers may surface pages you would rather keep out of search results, such as login pages. Robots.txt alone cannot secure such pages, so anything truly confidential needs real access controls.

- Poor user experience: If search engines crawl and index irrelevant or low-quality content on your site, this can lead to a poor user experience for visitors. They may have difficulty finding the information they need, leading to frustration and a higher bounce rate.

- Increased server load: If search engines are allowed to crawl every page on your site, including low-value ones, this can increase server load and slow down a self-hosted site, which can hurt the user experience.

In summary, not optimizing your Robots.txt file can lead to poor search engine rankings, duplicate content issues, security risks, a poor user experience, and increased server load.

How to Optimize Robots.txt for Your Blogger Website

To optimize your Robots.txt file for your Blogger website, follow these steps:

- Identify the pages you want search engines to crawl: Before creating or editing your robots.txt file, identify the pages and content you want search engines to crawl and index. On Blogger, this usually means your homepage, blog posts, and static pages such as About or Contact.

- Use a Robots.txt generator: If you are not familiar with the syntax of Robots.txt files, you can use a Robots.txt generator to create an optimized file for your Blogger website. Simply input the pages you want search engines to crawl and the ones you want to block, and the tool will generate the syntax for you.

- Use the correct syntax: If you choose to create your Robots.txt file manually, it is important to use the correct syntax. Start by specifying the user-agent, which refers to the search engine you want to target (e.g., Googlebot). Then, specify the pages or directories you want to allow or disallow crawling. For example, to block search engines from crawling your admin directory, use the following syntax: "Disallow: /admin/".

- Test your robots.txt file: Once you have created or edited your robots.txt file, test it to make sure it works as intended. In Google Search Console, the robots.txt report shows whether Google could fetch and parse your file, and the URL Inspection tool shows whether a specific page is blocked. Google retired its older standalone robots.txt Tester in 2023.

- Keep your robots.txt file up to date: You do not need to edit robots.txt every time you publish a post, but review it whenever your blog's structure changes, for example when you add new sections or switch to a custom domain, so that new content is not accidentally blocked.
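Many Blogger blogs serve a default robots.txt similar to the one below, which blocks /search (internal search results and label pages) for all crawlers, leaves the AdSense crawler Mediapartners-Google unrestricted, and lists the sitemap. Treat this as an assumption and compare it with your own blog's /robots.txt; example.blogspot.com is a placeholder. The sketch checks which kinds of Blogger URLs stay crawlable using Python's urllib.robotparser.

```python
from urllib.robotparser import RobotFileParser

# Assumed Blogger-style default; verify against your own /robots.txt.
BLOGGER_STYLE = """\
User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search
Allow: /

Sitemap: https://example.blogspot.com/sitemap.xml
"""

rp = RobotFileParser()
rp.parse(BLOGGER_STYLE.splitlines())
base = "https://example.blogspot.com"

checks = {
    "post": base + "/2023/05/my-first-post.html",
    "static page": base + "/p/about.html",
    "label page": base + "/search/label/SEO",
}
for name, url in checks.items():
    print(f"{name:12} Googlebot allowed: {rp.can_fetch('Googlebot', url)}")

# The AdSense crawler has its own, unrestricted group.
print(rp.can_fetch("Mediapartners-Google", checks["label page"]))  # True
print(rp.site_maps())  # ['https://example.blogspot.com/sitemap.xml']
```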

By optimizing your Robots.txt file for your Blogger website, you can improve search engine rankings, prevent duplicate content issues, enhance website security, and improve the user experience for your visitors.

Step-by-Step Guide to Optimizing Robots.txt

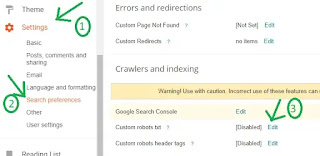

To add a custom robots.txt file to your Blogger website, follow these steps:

Sign in to your Blogger dashboard and select your blog.

Click on the "Settings" tab on the left-hand side of the screen.

Click on the "Search preferences" option.

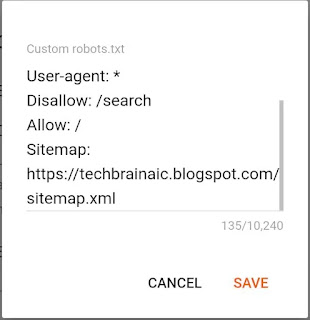

Scroll down to the "Crawlers and indexing" section and click on the "Edit" button next to "Custom robots.txt".

In the text box that appears, type or copy and paste the desired code for your robots.txt file.

Click on the "Save Changes" button to save your robots.txt file.

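Note that Blogger automatically generates a default robots.txt file for your website, but you can create a custom file to better control how search engines crawl your site. In the newer Blogger interface, these options appear directly on the Settings page under "Crawlers and indexing": switch on "Enable custom robots.txt", then click "Custom robots.txt". Be sure to double-check your robots.txt file for any errors or syntax issues before saving it.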
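Before saving, a quick automated check can catch common slips. The hypothetical lint_robots function below flags lines without a colon, misspelled directives, rules that appear before any User-agent line, and paths that do not start with "/" or "*". It only knows a handful of common directives, so treat its warnings as hints rather than a full validator.

```python
# Assumption: only these common directives are considered valid.
KNOWN_DIRECTIVES = {"user-agent", "allow", "disallow", "sitemap", "crawl-delay"}


def lint_robots(robots_txt):
    """Return human-readable warnings for common robots.txt syntax slips."""
    problems = []
    seen_user_agent = False
    for n, raw in enumerate(robots_txt.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # ignore comments and blank lines
        if not line:
            continue
        key, sep, value = line.partition(":")
        key = key.strip().lower()  # directive names are case-insensitive
        if not sep:
            problems.append(f"line {n}: missing ':'")
            continue
        if key not in KNOWN_DIRECTIVES:
            problems.append(f"line {n}: unknown directive {key!r}")
            continue
        if key == "user-agent":
            seen_user_agent = True
        elif key in ("allow", "disallow"):
            if not seen_user_agent:
                problems.append(f"line {n}: {key} rule before any User-agent line")
            path = value.strip()
            if path and not path.startswith(("/", "*")):
                problems.append(f"line {n}: path {path!r} should start with '/'")
    return problems


sample = "Disallow: /x\nUser-agent: *\nDisalow: /y\nAllow /z\nDisallow: private/\n"
for warning in lint_robots(sample):
    print(warning)
```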

Common Mistakes to Avoid When Optimizing Robots.txt

Here are some common mistakes to avoid when optimizing robots.txt:

- Blocking important files or directories: Make sure you don't accidentally block important files or directories that search engines need to crawl to properly index your website.

- Disallowing all bots: Avoid using "Disallow: /" to block all bots from your website, as this will prevent your site from being indexed by search engines.

- Using incorrect syntax: Make sure you use the correct syntax when creating your robots.txt file. Crawlers silently skip lines they cannot understand, so even a small typo can cause a rule to be ignored or to block more than you intended.

- Not updating the file: Regularly check and update your robots.txt file as needed to ensure that search engines can properly crawl your site and that new pages or directories are not accidentally blocked.

- Not testing the file: Always test your robots.txt file to ensure that it is working correctly and that search engines are not being prevented from crawling important pages or directories.
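One way to avoid blocking important pages by accident is to keep a short list of URLs that must stay crawlable and check every new version of the file against it. A minimal sketch with Python's urllib.robotparser; the helper name and URLs are hypothetical:

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, agent="Googlebot"):
    """Return the subset of `urls` that `agent` may not crawl."""
    rp = RobotFileParser()
    rp.parse(robots_txt.splitlines())
    return [u for u in urls if not rp.can_fetch(agent, u)]


# Hypothetical pages that should always remain crawlable.
IMPORTANT = [
    "https://example.blogspot.com/",
    "https://example.blogspot.com/2023/05/my-first-post.html",
    "https://example.blogspot.com/p/about.html",
]

# An accidental "Disallow: /" blocks every important page.
print(blocked_urls("User-agent: *\nDisallow: /\n", IMPORTANT))
# A targeted rule leaves them all crawlable.
print(blocked_urls("User-agent: *\nDisallow: /search\n", IMPORTANT))  # []
```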

Testing and Validating Your Robots.txt File

Testing and validating your robots.txt file is an important step in ensuring that search engines are able to properly crawl and index your website. Here are some steps you can take to test and validate your robots.txt file:


Use a testing tool: There are a number of free robots.txt testing tools available online that can help you quickly and easily test your file for errors and ensure that it is blocking or allowing access to the correct pages and directories.

Check your website's search engine visibility: Use a search engine to check if your website's pages are being indexed. If you notice that some pages are not appearing in search engine results, you may need to adjust your robots.txt file to allow search engine crawlers to access them.

Validate your file with Google Search Console: Use Google Search Console to validate your robots.txt file and ensure that it is formatted correctly and not blocking any important pages or directories.

Check for syntax errors: Make sure to double-check your file for any syntax errors that could prevent search engines from properly interpreting it.

Monitor website traffic: Monitor your website's traffic and performance after making changes to your robots.txt file to ensure that it is properly allowing or blocking search engine crawlers.
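When you change the file, it helps to compare the old and new versions on a fixed set of URLs so that you can see exactly which pages changed status. A minimal sketch using Python's urllib.robotparser; the file contents, URLs, and helper names are illustrative assumptions:

```python
from urllib.robotparser import RobotFileParser


def verdicts(robots_txt, urls, agent):
    """Map each URL to True (crawlable) or False (blocked) for `agent`."""
    rp = RobotFileParser()
    rp.parse(robots_txt.splitlines())
    return {url: rp.can_fetch(agent, url) for url in urls}


def changed_verdicts(old_txt, new_txt, urls, agent="Googlebot"):
    """Return {url: (old, new)} for every URL whose verdict changed."""
    old = verdicts(old_txt, urls, agent)
    new = verdicts(new_txt, urls, agent)
    return {url: (old[url], new[url]) for url in urls if old[url] != new[url]}


BASE = "https://example.blogspot.com"
URLS = [BASE + "/2023/05/my-first-post.html", BASE + "/search/label/SEO", BASE + "/p/about.html"]

OLD = "User-agent: *\nDisallow: /search\n"
NEW = "User-agent: *\nDisallow: /search\nDisallow: /p/\n"

print(changed_verdicts(OLD, NEW, URLS))
# {'https://example.blogspot.com/p/about.html': (True, False)}
```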

How to Test Your Robots.txt File

To test your Robots.txt file, follow these steps:

- Use Google Search Console: Google Search Console is a free tool that can check your robots.txt file. Log in to your account, select your website, and open the robots.txt report (under Settings) to see whether Google fetched your file and whether it found any errors. To check whether a specific page is blocked, enter its URL in the URL Inspection tool. Older guides mention a "robots.txt Tester" under a "Crawl" menu; Google has since retired that tool.

- Use the Robots.txt Checker: There are many free online robots.txt checkers available that can help you test your file. You can use these tools by simply entering the URL of your website.

- Check for Errors: Once you have tested your Robots.txt file, make sure to check for any errors. If you find any errors, fix them immediately to ensure that your file is working correctly.

- Validate Your File: Once you have made changes to your Robots.txt file, you need to validate it to ensure that it is working correctly. Use the Google Search Console or any other online validator to check your file for any errors.

- Monitor Your Site: Even after testing and validating your Robots.txt file, you need to keep an eye on your website to ensure that it is working correctly. Check your website's traffic and search engine rankings regularly to make sure that your site is performing well.

Validating Your Robots.txt File

Validating your Robots.txt file is an important step in ensuring that your website is properly optimized for search engines. It helps to ensure that there are no errors or issues that could prevent search engines from crawling and indexing your website correctly.

To validate your robots.txt file, you can use various free online tools built specifically for robots.txt; general-purpose validators such as the W3C Markup Validator check HTML, not robots.txt. Google's own checks now live in Search Console's robots.txt report, which replaced the older "robots.txt Tester" and shows any parsing errors or warnings Google found in your file.

The old Google robots.txt Tester worked inside your Google Search Console account: you entered a URL from your site, chose a Google user-agent, and the tool reported whether that URL was allowed or blocked, highlighting the rule responsible. Today, the robots.txt report covers fetch status and parsing errors, and the URL Inspection tool tells you whether a particular page is blocked by robots.txt.
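The idea of testing one URL against several user-agents can also be reproduced locally. The sketch below uses Python's urllib.robotparser with a hypothetical file and URL; note that this parser matches user-agent names by simple substring search, a simplification of how Google picks a group.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical file: an image-crawler group plus a catch-all group.
ROBOTS_TXT = """\
User-agent: Googlebot-Image
Disallow: /images/

User-agent: *
Disallow: /search
"""

rp = RobotFileParser()
rp.parse(ROBOTS_TXT.splitlines())

url = "https://example.blogspot.com/images/header.png"
for agent in ("Googlebot", "Googlebot-Image", "Bingbot"):
    verdict = "allowed" if rp.can_fetch(agent, url) else "blocked"
    print(f"{agent:16} {verdict}")
```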

It is important to regularly test and validate your Robots.txt file to ensure that it is properly optimized for search engines and that there are no issues that could negatively impact your website's visibility in search results.

Frequently Asked Questions

What is robots.txt?

Robots.txt is a text file that tells search engine robots which pages or sections of your website they may crawl, and which pages or sections to avoid.

Why is optimizing robots.txt important for my Blogger website?

Optimizing robots.txt can help improve your website's SEO by directing search engines to crawl and index the most important pages on your site, while avoiding pages that may not be as relevant or important. This can help improve your website's visibility and rankings in search engine results pages.

How do I access my robots.txt file on my Blogger website?

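To access your robots.txt file on your Blogger website, go to your Blogger dashboard, click on "Settings", then "Search preferences", and then click on "Edit" next to "Custom robots.txt". In the newer Blogger interface, scroll down the Settings page to "Crawlers and indexing", turn on "Enable custom robots.txt", and click "Custom robots.txt".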

What are some important things to include in my robots.txt file?

It's important to include rules for any pages or sections of your website that you do not want search engines to crawl, such as internal search results, login pages, or duplicate content. You can also add a Sitemap line that points crawlers to your sitemap. Some crawlers, such as Bingbot, honor a Crawl-delay line that spaces out their requests, but Googlebot ignores it.
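Python's urllib.robotparser can read both Crawl-delay and Sitemap lines, which makes it easy to see how they are grouped. In this hypothetical file, the Disallow rule is repeated in the bingbot group because a crawler follows only its own group; without it, Bingbot would no longer be kept out of /search. Remember that Googlebot ignores Crawl-delay.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical file; example.blogspot.com is a placeholder.
ROBOTS_TXT = """\
User-agent: bingbot
Crawl-delay: 10
Disallow: /search

User-agent: *
Disallow: /search

Sitemap: https://example.blogspot.com/sitemap.xml
"""

rp = RobotFileParser()
rp.parse(ROBOTS_TXT.splitlines())

print(rp.crawl_delay("bingbot"))    # 10 (seconds between requests)
print(rp.crawl_delay("Googlebot"))  # None: no delay in the "*" group
print(rp.site_maps())               # ['https://example.blogspot.com/sitemap.xml']
print(rp.can_fetch("bingbot", "https://example.blogspot.com/search?q=seo"))  # False
```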

Are there any common mistakes to avoid when optimizing robots.txt for my Blogger website?

Yes, some common mistakes include blocking important pages or sections of your website, using incorrect syntax in your robots.txt file, and forgetting to update your robots.txt file when you make changes to your website's structure or content.

Can I test my robots.txt file to see if it's working correctly?

Yes. Google Search Console's robots.txt report and URL Inspection tool, as well as third-party robots.txt checkers, can help you identify any errors or issues in your robots.txt file that may be affecting your website's SEO.

Conclusion

Optimizing the robots.txt file is an essential part of technical SEO for any website, including a Blogger website. The robots.txt file helps search engines understand which pages and resources they can or cannot crawl.

In this blog post, we have covered the basics of robots.txt, its importance, and the benefits of optimizing it for your Blogger website. We also walked through a step-by-step guide to optimizing robots.txt for your Blogger website, including identifying the parts of your website that you want to block from search engines, creating a robots.txt file, and testing and validating it.

It is essential to avoid common mistakes when optimizing the robots.txt file, such as blocking essential pages and resources, not updating the file, and using incorrect syntax. Testing and validating your robots.txt file is also crucial to ensure that it works as expected and does not block any essential pages and resources.

By following the guidelines and best practices discussed in this blog post, you can make sure your Blogger website is crawled efficiently and is in a better position to rank well in search results.

Recap of the importance of optimizing robots.txt for your Blogger website

Optimizing your robots.txt file is crucial for ensuring that search engine crawlers can effectively crawl your Blogger website. By using the right directives in your robots.txt file, you can keep search engines from crawling pages you don't want in search results, such as duplicate content or low-quality pages. This keeps crawlers focused on your best content and helps you make better use of your crawl budget.

In addition to blocking unwanted pages, optimizing your robots.txt file can also help search engine crawlers more efficiently crawl your website, which can lead to improved search engine rankings and increased traffic. A well-optimized robots.txt file can also help ensure that your website's content is indexed properly and appears in relevant search engine results pages.

By following the step-by-step guide outlined in this article, you can optimize your robots.txt file for your Blogger website and help improve your website's SEO. Regularly reviewing and updating your robots.txt file can also help ensure that your website is performing optimally in search engine rankings.

Final Thoughts and Additional Resources

In conclusion, optimizing your robots.txt file is an essential part of improving your Blogger website's SEO. By using the tips and steps outlined in this guide, you can ensure that search engine crawlers can effectively crawl your website while also blocking any pages or directories that you do not want crawled.

Remember to regularly review and update your robots.txt file as needed to ensure it stays up-to-date with any changes to your website's structure or content.

For additional resources on optimizing your Blogger website for SEO, be sure to check out the following:

- Google Search Console: A free tool from Google that can help you monitor and improve your website's presence in search engine results pages.

- Ahrefs: A paid SEO tool that provides a wide range of features for optimizing your website's search engine performance.

- Moz: Another popular SEO tool that provides keyword research, site auditing, and link building capabilities.

By using these tools in combination with a well-optimized robots.txt file, you can help your Blogger website rank higher in search engine results pages and attract more traffic to your site.

Warning! All Our Posts Are Protected by DMCA. Therefore Copying Or Republishing Of The Contents Of This Blog Without Our Permission Is Highly Prohibited!

If Discovered, Immediate Legal Action Will Be Taken Against Violator.

That's All

Thanks for reading our blog

Have a great day ahead!😍

© TechBrainaic

All rights reserved